February 25, 2020

How To Compete in a Marathon Match

Beginning a Match

Start by visiting the Topcoder Events Calendar to find out when the next Marathon Match will take place.

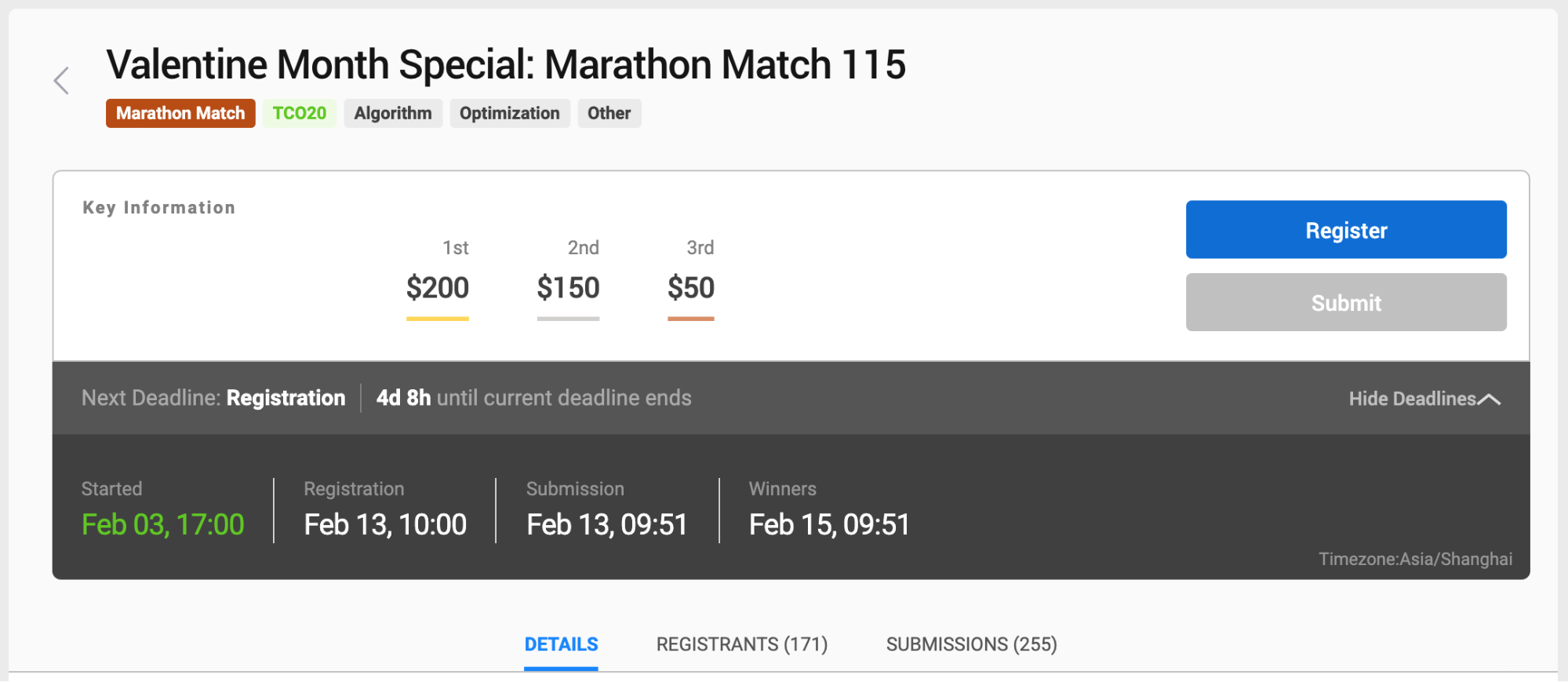

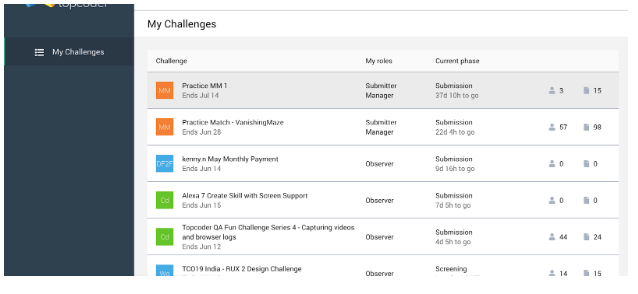

To enter a match, visit the Marathon Challenge Listings Page, which shows all active competitions. For each match you can see the start and end dates, the number of competitors (people who submitted at least once), the number of registrants (people who registered, whether or not they submitted), and the total number of submissions per competitor.

Click on a contest to open its Match Details page, which includes an overview of the competition and its deadline.

The Problem Statement

After you have registered for the match, make sure you are on the “DETAILS” tab.

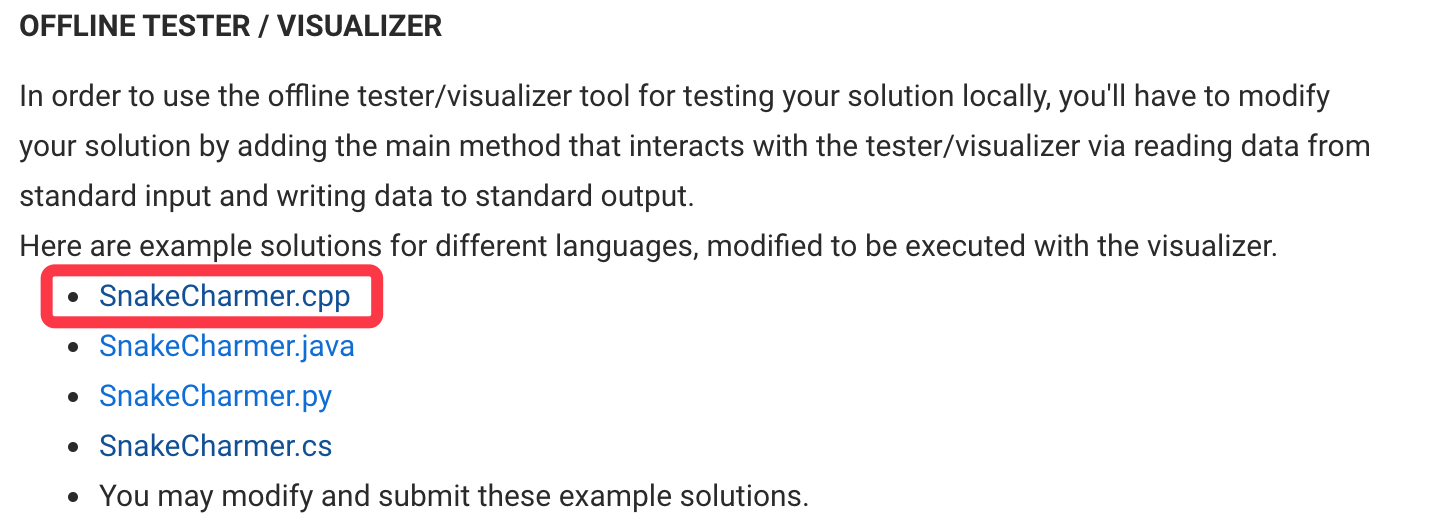

Here you can read the problem statement and learn what the task is, how the scoring works, and how to submit. It also tells you whether you will implement a function and submit code to be run and evaluated on the Topcoder servers, or submit a list of answers (most likely a CSV file) to be evaluated. This page may include examples of the desired output, an offline tester/visualizer to check your submissions locally, information about the prizes (if available), and links to the data (if any).

If you feel like something may be unclear in the problem statement, don’t hesitate to go to the challenge forum and ask there. The forum includes announcements, questions, and discussions about the match.

When you feel like you fully understand the problem at hand, it is time to start coding!

Submitting to the Match

There are three types of submission formats:

Code Without Docker File

In this submission format we provide a standard Dockerfile, and you are only required to submit the code file. More information about compilation is available below in the section Other Details for Code Without Docker.

Your submission should be a single ZIP file with the following content:

    <your code>

e.g. SnakeCharmer.cpp

where SnakeCharmer is the ClassName defined in the problem statement.

You can download an example solution, and zip it to submit.
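
The packaging step can also be scripted. The following is a minimal sketch, not an official Topcoder tool: the function name `make_submission_zip` and the archive name `submission.zip` are assumptions chosen for illustration. It places a single source file at the root of a ZIP archive.

```python
import zipfile
from pathlib import Path


def make_submission_zip(source_file: str, zip_path: str) -> str:
    """Pack one source file into a ZIP archive, at the archive root."""
    source = Path(source_file)
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        # arcname drops any parent folders so only the file name is stored.
        archive.write(source, arcname=source.name)
    return zip_path
```

For example, `make_submission_zip("SnakeCharmer.cpp", "submission.zip")` would produce an archive containing only SnakeCharmer.cpp.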

Code Only

Your submission should be a single ZIP file with the following content:

    /code
        Dockerfile
        flags.txt // optional
        <your code>

The /code directory should contain a dockerized version of your system that will be used to generate your algorithm’s output in both testing phases (provisional and final) in a well defined and standardized way. This folder must contain a Dockerfile that will be used to build a docker container that will host your system. How you organize the rest of the contents of the /code folder is up to you.

e.g. Sample of Code only
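
As a rough sketch of how such an archive could be assembled, the helper below zips a local folder so that its files appear under code/ in the archive. The function name `zip_code_dir` is hypothetical, and storing entries as `code/...` (without a leading slash) is an assumption about how the /code layout maps onto ZIP entry names.

```python
import zipfile
from pathlib import Path


def zip_code_dir(code_dir: str, zip_path: str) -> list:
    """Zip a local folder so its files are stored under code/ in the archive."""
    root = Path(code_dir)
    if not (root / "Dockerfile").is_file():
        raise FileNotFoundError("the code folder must contain a Dockerfile")
    # Collect the files before creating the archive; write the archive
    # outside code_dir so it does not end up inside itself on a later run.
    files = sorted(path for path in root.rglob("*") if path.is_file())
    names = []
    with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
        for path in files:
            arcname = "code/" + path.relative_to(root).as_posix()
            archive.write(path, arcname=arcname)
            names.append(arcname)
    return names
```

The returned list of entry names can be printed to double-check the layout before uploading.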

Data and Code

Your submission should be a single ZIP file with the following content:

    /solution
        solution.csv
    /code
        Dockerfile
        flags.txt // optional
        <your code>

The file /solution/solution.csv is the output your algorithm generates on the provisional test set. The format of this file will be described in the challenge specification.

The /code directory should contain a dockerized version of your system that will be used to reproduce your results in a well defined and standardized way. This folder must contain a Dockerfile that will be used to build a docker container that will host your system during final testing. How you organize the rest of the contents of the /code folder is up to you.

e.g. Sample of Data and Code
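
Before uploading, it can help to verify that the archive has the expected layout. The sketch below is illustrative only: the function name `missing_entries` is hypothetical, and it assumes the two required files are stored as `solution/solution.csv` and `code/Dockerfile` (with or without a leading slash).

```python
import zipfile


def missing_entries(zip_path: str) -> list:
    """Return the required entries that are absent from a Data and Code ZIP."""
    required = ["solution/solution.csv", "code/Dockerfile"]
    with zipfile.ZipFile(zip_path) as archive:
        # Strip a leading slash so "/code/Dockerfile" and "code/Dockerfile" both match.
        present = {name.lstrip("/") for name in archive.namelist()}
    return [entry for entry in required if entry not in present]
```

An empty list means both required files were found; anything else names what is still missing.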

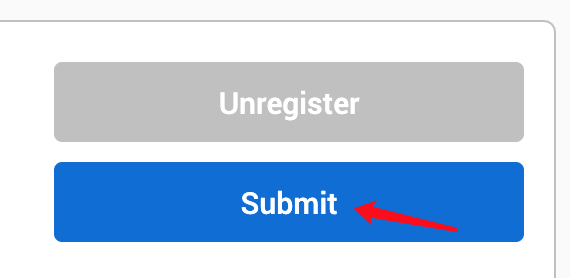

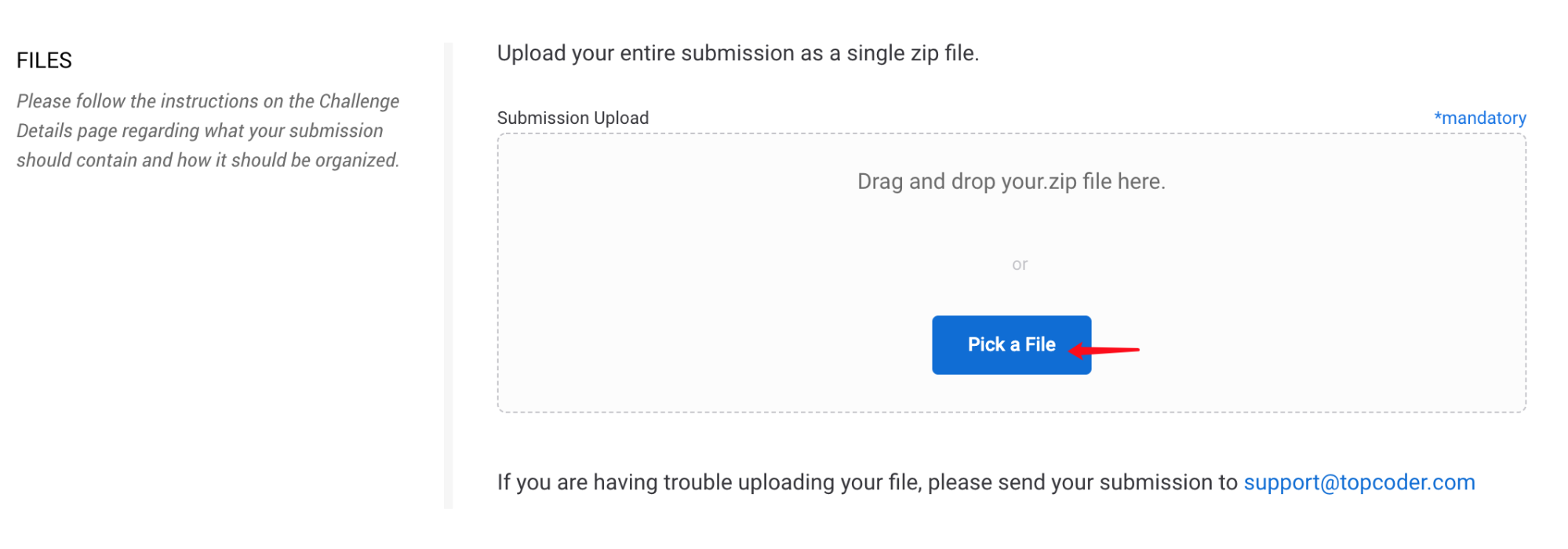

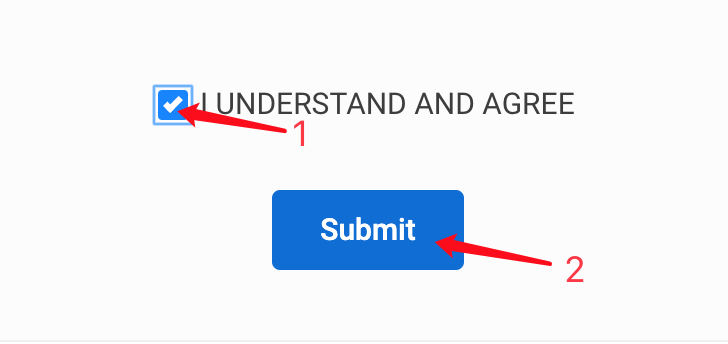

When you finish the task, review the submission instructions in the problem statement and make your submission. You can submit by clicking the “Submit” button on the Challenge Details page, picking a file and choosing “I UNDERSTAND AND AGREE”.

Notification Emails

After submitting your solution, you will receive four emails:

Notification - Thanks for submitting.

Virus Scan - Review Score

A score of 100 means your submission contains no viruses or potentially harmful files and is being forwarded to run on the example test cases.

Example Test Case Scoring (Only in the case of Code Without Docker File)

A score of 1.0 means your submission ran successfully on the example test cases and has moved to the queue for the provisional test cases.

Provisional Test Case Scoring (may take up to 20 minutes because there are 100 provisional cases)

Accessing Submissions and Artifacts

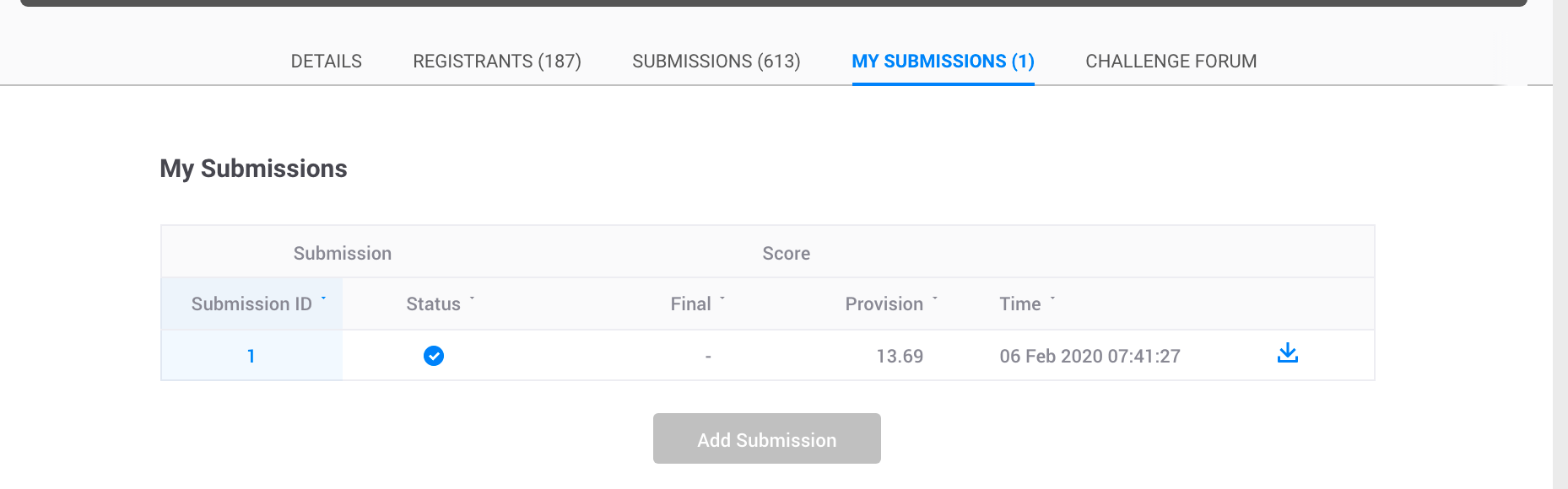

You can access your submissions via the “My Submissions” Tab on the Challenge Page. We are working on making the submission artifacts available here as well.

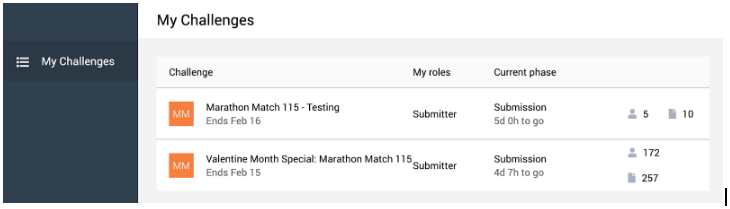

Navigate to the Submission-Review App

Select the match

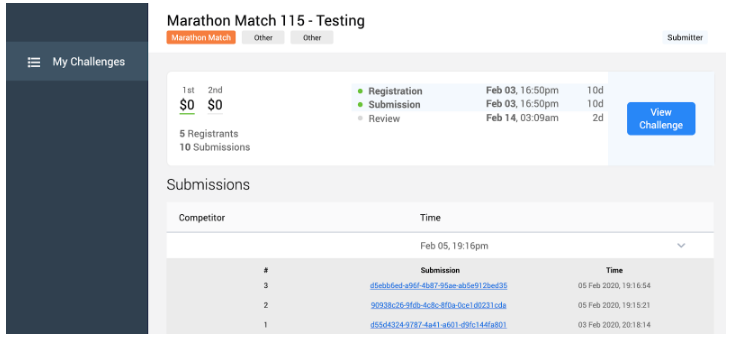

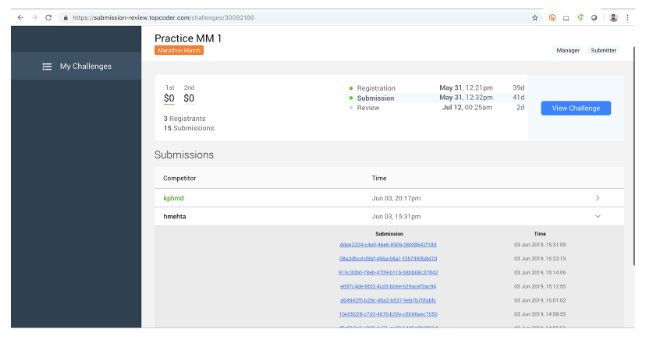

Click on a challenge to view your previous submissions.

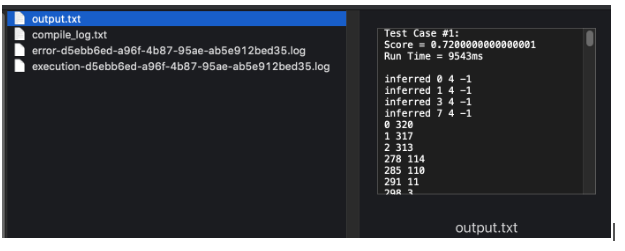

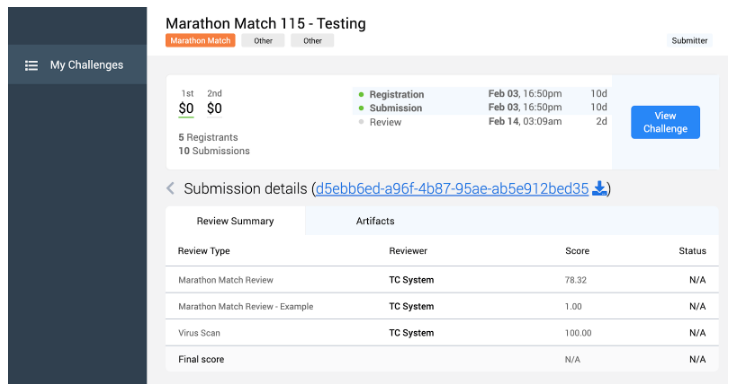

Click on the submission to see the submission details. You will see the following screen:

Click on the Artifacts to see the feedback details. You will see the following screen

Here you have a few choices:

You can click on the download icon button next to the submission link to download your submission

You can click on the download icon button in the Action column to download your submission’s outputs.

After the match is over, you can access other competitors’ submissions. In the Submission-Review App you will be able to see others’ scores and submissions.

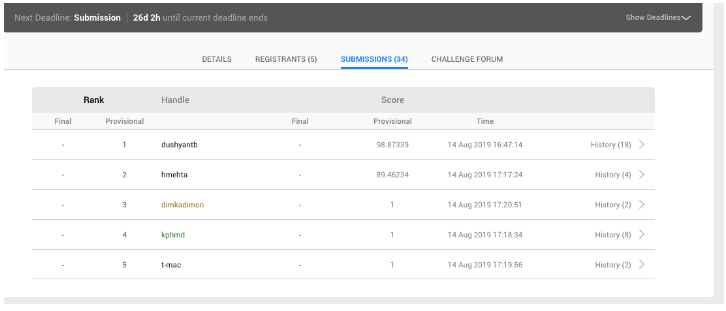

After making your first verified submission, your score will be shown on the standings page along with how many submissions you’ve executed and your current rank on the leaderboard. The standings page shows every competitor’s score and rank, so you are aware of how well your solution performed against others.

After The Match

When the match ends, your code/submission will be evaluated against other test cases to see how well it performed.

Scoring can be either absolute (a scoring metric is applied and its output alone decides the best solution) or relative (submissions are scored relative to the competitor with the highest score, or by how many competitors you beat on a particular test case).
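
To make the relative case concrete, here is a small sketch of one common form of relative scoring on a single test case. The exact formula is always defined in the problem statement; the function name `relative_scores`, the ratio used, and the treatment of non-positive scores as zero are assumptions for illustration only.

```python
def relative_scores(raw_scores: dict, higher_is_better: bool = True) -> dict:
    """Scale each competitor's raw score on one test case against the best one."""
    positive = [score for score in raw_scores.values() if score > 0]
    if not positive:
        return {name: 0.0 for name in raw_scores}
    best = max(positive) if higher_is_better else min(positive)
    scaled = {}
    for name, score in raw_scores.items():
        if score <= 0:
            scaled[name] = 0.0           # assumed: failed runs earn nothing
        elif higher_is_better:
            scaled[name] = score / best  # the best competitor gets 1.0
        else:
            scaled[name] = best / score  # for minimization, smaller is better
    return scaled
```

With raw scores of 50 and 100 on a maximization problem, the two competitors would receive 0.5 and 1.0 respectively under this assumed formula.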

When the competition ends and the final results are available, you will be able to view both the system and provisional ranking.

Other Details for Code Without Docker

Submission Queue

Your submission first runs on a separate machine so that the example test case output reaches you as soon as possible. The leaderboard will display your score as 1.0.

On the main leaderboard, a score of 1 means that the submission is in the queue: the example test cases have been executed and the provisional test cases are now running. So if you see five members with a score of 1, five submissions are in the queue. After the provisional tests have been executed, the 1 is replaced by the official score of that submission.

Submission Artifacts

You can also access your artifacts in the Submission-Review App.

Navigate to the Submission-Review App and select the match

Click on a challenge to view your previous submissions.

Click on the “Artifacts” tab to see the feedback.

You can click on the download icon button in the Action column to download your submission’s compile logs, example test case scores, run time, and standard error output.

Compile Logs, Example Test Case Scores, Run Time and Standard Error Output

When you make a submission, it first runs against example test cases on a dedicated machine for speed.

Once that is completed, your submission will be added to the queue on a machine dedicated for running submissions against provisional test cases.

You will receive an email with the text:

The following review(s) were performed:

Marathon Match Review - Example

The score applied to your submission is 1.

A score of 1.0 indicates a successful run of your submission against the example test cases. The match leaderboard will also display your score as 1.0 until the results of the provisional test cases are returned.

If you head over to the Submission-Review App you will be able to see the output as soon as the testing is complete. You can find your scores, runtime, and stderr output in the output.txt file, which you can find by unzipping the downloaded artifacts file.
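
If you download artifacts often, reading output.txt can be automated. The sketch below only assumes what is stated above, namely that the downloaded artifacts file is a ZIP containing output.txt; the function name `read_output_txt` is hypothetical, and because the folder structure inside the archive is not documented here, the code searches every folder for the file.

```python
import zipfile


def read_output_txt(artifact_zip: str) -> str:
    """Return the text of output.txt from a downloaded artifacts ZIP."""
    with zipfile.ZipFile(artifact_zip) as archive:
        for name in archive.namelist():
            # Match on the file name only, whatever folder it is stored in.
            if name.rsplit("/", 1)[-1] == "output.txt":
                return archive.read(name).decode("utf-8", errors="replace")
    raise FileNotFoundError("output.txt not found in the artifacts archive")
```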

Compile and Execution Commands

Your code will be built and executed with the fixed set of commands as follows:

C++

    g++ -std=gnu++11 -O3 /workdir/<FileName>.cpp -o /workdir/<FileName>
    /workdir/<FileName>

Java

    javac /workdir/<FileName>.java
    java -Xms1G -Xmx1G -cp /workdir <FileName>

C#

    csc /workdir/<FileName>.cs /out:/workdir/<FileName>.exe
    mono /workdir/<FileName>.exe

Python3.6

    python3.6 /workdir/<FileName>.py
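
For local experiments, the build and run steps listed above can be assembled programmatically. The helper below is a hypothetical sketch and not part of Topcoder's tooling: the function name and the language keys are invented for illustration, and C# is left out for brevity. The C++ binary is written to /workdir/<FileName> so that it matches the path the run step executes.

```python
def build_and_run_commands(language: str, file_name: str, workdir: str = "/workdir") -> list:
    """Return argument lists for compiling (if needed) and running a solution."""
    source = f"{workdir}/{file_name}"
    if language == "cpp":
        return [
            # Compile to /workdir/<FileName>, then execute that binary.
            ["g++", "-std=gnu++11", "-O3", f"{source}.cpp", "-o", source],
            [source],
        ]
    if language == "java":
        return [
            ["javac", f"{source}.java"],
            ["java", "-Xms1G", "-Xmx1G", "-cp", workdir, file_name],
        ]
    if language == "python":
        return [["python3.6", f"{source}.py"]]
    raise ValueError(f"unsupported language: {language}")
```

Each inner list can be passed directly to `subprocess.run` inside a container that mirrors the testing machine.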

Processing Server Specifications

Server and Docker Configuration

The solutions are executed on Amazon EC2 c3.large inside a Docker Container with the following configuration:

Ubuntu OS

Download the Docker Base Image

C++ Compiler Version Details

    Using built-in specs.
    COLLECT_GCC=gcc
    COLLECT_LTO_WRAPPER=/usr/lib/gcc/x86_64-linux-gnu/7/lto-wrapper
    OFFLOAD_TARGET_NAMES=nvptx-none
    OFFLOAD_TARGET_DEFAULT=1
    Target: x86_64-linux-gnu
    Configured with: ../src/configure -v --with-pkgversion='Ubuntu 7.4.0-1ubuntu1~18.04' --with-bugurl=file:///usr/share/doc/gcc-7/README.Bugs --enable-languages=c,ada,c++,go,brig,d,fortran,objc,obj-c++ --prefix=/usr --with-gcc-major-version-only --program-suffix=-7 --program-prefix=x86_64-linux-gnu- --enable-shared --enable-linker-build-id --libexecdir=/usr/lib --without-included-gettext --enable-threads=posix --libdir=/usr/lib --enable-nls --with-sysroot=/ --enable-clocale=gnu --enable-libstdcxx-debug --enable-libstdcxx-time=yes --with-default-libstdcxx-abi=new --enable-gnu-unique-object --disable-vtable-verify --enable-libmpx --enable-plugin --enable-default-pie --with-system-zlib --with-target-system-zlib --enable-objc-gc=auto --enable-multiarch --disable-werror --with-arch-32=i686 --with-abi=m64 --with-multilib-list=m32,m64,mx32 --enable-multilib --with-tune=generic --enable-offload-targets=nvptx-none --without-cuda-driver --enable-checking=release --build=x86_64-linux-gnu --host=x86_64-linux-gnu --target=x86_64-linux-gnu
    Thread model: posix
    gcc version 7.4.0 (Ubuntu 7.4.0-1ubuntu1~18.04)

C# - Mono JIT compiler version

    Mono JIT compiler version 5.20.1.19 (tarball Thu Apr 11 09:02:17 UTC 2019)
    Copyright (C) 2002-2014 Novell, Inc, Xamarin Inc and Contributors. www.mono-project.com
        TLS:           __thread
        SIGSEGV:       altstack
        Notifications: epoll
        Architecture:  amd64
        Disabled:      none
        Misc:          softdebug
        Interpreter:   yes
        LLVM:          yes(600)
        Suspend:       hybrid
        GC:            sgen (concurrent by default)

Java Compiler Version Details

    openjdk version "1.8.0_212"
    OpenJDK Runtime Environment (build 1.8.0_212-8u212-b03-0ubuntu1.18.04.1-b03)
    OpenJDK 64-Bit Server VM (build 25.212-b03, mixed mode)

Python Version

Python 3.6.8 - https://docs.python.org/3.6/whatsnew/changelog.html#python-3-6-8-final

Setting Up Your Local Environment Similar to the Testing Machine

You will require prior knowledge of Docker to set up an environment similar to Topcoder’s production environment in which your code is executed.

Install Docker:

Create a Project Directory

In your project directory, create a file named Dockerfile and paste the following:

    FROM ubuntu:18.04

    # Install General Requirements
    RUN apt-get update && \
        apt-get install -y --no-install-recommends \
        apt-utils \
        build-essential \
        software-properties-common

    # Install Mono
    RUN apt-get install gnupg ca-certificates -y \
        && apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys 3FA7E0328081BFF6A14DA29AA6A19B38D3D831EF \
        && echo "deb https://download.mono-project.com/repo/ubuntu stable-bionic main" | tee /etc/apt/sources.list.d/mono-official-stable.list \
        && apt update \
        && apt install mono-devel -y

    # Install gcc
    RUN apt-get -y install gcc

    RUN apt-get update && \
        apt-get install -y openjdk-8-jdk && \
        apt-get clean && \
        rm -rf /var/lib/apt/lists/* && \
        rm -rf /var/cache/oracle-jdk8-installer;

    # Fix certificate issues, found as of
    # https://bugs.launchpad.net/ubuntu/+source/ca-certificates-java/+bug/983302
    RUN apt-get update && \
        apt-get install -y ca-certificates-java && \
        apt-get clean && \
        update-ca-certificates -f && \
        rm -rf /var/lib/apt/lists/* && \
        rm -rf /var/cache/oracle-jdk8-installer;

    # Setup JAVA_HOME, this is useful for docker commandline
    ENV JAVA_HOME /usr/lib/jvm/java-8-openjdk-amd64/
    RUN export JAVA_HOME

    RUN add-apt-repository ppa:jonathonf/python-3.6
    RUN apt-get update
    RUN apt-get install -y build-essential python3.6 python3.6-dev python3-pip python3.6-venv maven

    # update pip
    RUN python3.6 -m pip install pip --upgrade

In the same folder where you have your docker file, create the folder structure ./tester/target/

Inside target add the local tester jar provided to you and the submission source code you want to test

Execute the following commands. You can choose your own <docker_image_name>. The -v option needs an absolute host path to bind-mount the ./tester folder; a bare name such as tester would be treated as a named Docker volume instead.

    $ docker build -t <docker_image_name> -f Dockerfile .
    $ docker run -it -v "$(pwd)/tester:/tester" <docker_image_name> /bin/bash
    cd /tester/target/

    java -jar tester.jar -exec "<command>" -seed <seed>

Here, <command> is the command to execute your program, and <seed> is the seed for test case generation. If your compiled solution is an executable file, the command will be the full path to it, for example, “C:\topcoder\solution.exe” or “~/topcoder/solution”. In the case that your compiled solution needs to be run with the help of an interpreter, for example, if you program in Java, the command will be something like “java -cp C:\topcoder\solution”.

Finally, remember that you can print any debug information of your solution to standard error, and it will be forwarded to the standard out of the tester.
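
In a Python solution, for instance, a small helper keeps debug output on standard error and away from the answers written to standard output. The name `debug` is simply an illustrative choice.

```python
import sys


def debug(*values):
    """Print debug information to standard error instead of standard output."""
    print(*values, file=sys.stderr, flush=True)
```

Calling `debug("step", 3, "best", 41.5)` writes the line `step 3 best 41.5` to standard error.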

Note: You will see an exception because some of the fetched dependencies from the maven repository are meant for the execution on Topcoder’s System. Please ignore that exception. Your individual scores will be added to output.txt in the workdir.

Multithreading

The docker container is restricted to use 1 core for each submission, so multithreading might not help you much.